What is Google Veo 3?

Google Veo 3 is Google’s latest state-of-the-art generative video model, developed by the DeepMind team. Unveiled around Google I/O 2025, Veo 3 represents a major leap in AI video generation by introducing native audio and dramatic improvements in visual fidelity. In practical terms, Veo 3 can create short video clips (up to ~8 seconds long) from a text prompt (and optionally image prompts), complete with synchronized sound effects, background audio, and even spoken dialogue. This “video meets audio” approach sets Veo 3 apart from earlier text-to-video models that produced visuals only. Google touts Veo 3’s exceptional prompt adherence and stunning cinematic outputs that excel at physics and realism. In other words, the model tries to closely follow the script or scene you describe, resulting in mini-movies with coherent motion and even characters that can talk.

Under the hood, Veo 3 builds upon Google’s generative AI research (its predecessors include Veo 2, Imagen for images, and Lyria for music). It has been redesigned for greater realism – supporting up to 4K resolution output – and it features a better understanding of real-world physics (e.g. natural movement of objects, camera shakes). Prompt fidelity is also improved, meaning if you give it a detailed scenario or lines of dialogue, it’s more likely to match those instructions closely. Crucially for creators, Veo 3 can generate audio tracks in sync with the video: environmental sounds (like wind, footsteps, engines), sound effects, background music, and even character voices for dialogue. This all-in-one generative capability (video + audio together) is a game-changer – previously, creators using AI might have had to generate visuals and then separately add voiceovers or sound effects. With Veo 3, you can literally include dialogue or sound cues in your text prompt, and the model will produce a clip that “speaks” and sounds as described.

How does it work? From a user perspective, you enter a written prompt describing the scene you want. For example, “A wise old sailor on a ship at sea gives a monologue, with waves crashing and seagulls crying in the background”. Veo 3 will attempt to generate an 8-second video of exactly that – the old sailor’s lips moving in time with the monologue, realistic ocean waves in view, and audio of the sea and seagulls. Google has demonstrated such examples, and early users report the results are impressively coherent. In one demo, Veo 3 generated a clip of an old bearded sailor on a ship, muttering about the untamed ocean, with the camera capturing the cinematic details and the sailor’s voice audible over crashing waves. Another example shows a forest scene with an owl and a badger conversing, complete with owl hoots and rustling leaves in the audio. This level of multimodal output – essentially mini film scenes on demand – has huge implications for digital content creators.

A frame from a Veo 3-generated video: an old sailor character, complete with lifelike face, lighting, and synchronized audio for his dialogue. Veo 3’s strength is cinematic realism – it can produce characters and scenes with an impressive level of detail and mood, all from a text prompt.

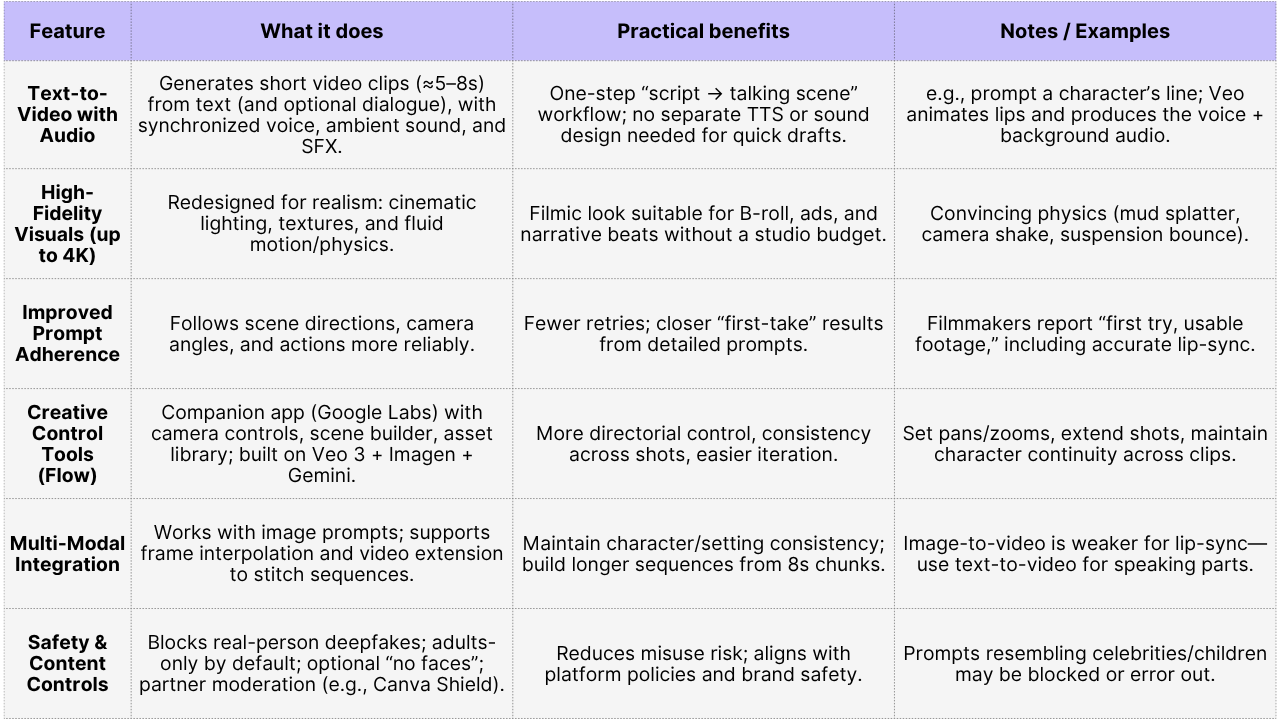

Key Features and Capabilities

Google’s Veo 3 is the first AI video model that truly feels like a mini film crew on demand: it turns a written scene (and even quoted dialogue) into a high-fidelity clip with synchronized voice, ambient sound, and cinematic motion—no separate TTS or sound library needed. Built for 4K realism and better physics, it follows shot directions and actions with far fewer retries, and pairs with Google’s Flow app for camera moves, shot continuity, and fast iteration. For filmmakers, YouTubers, and small teams, that means story ideas jump from script to screen in minutes—not weeks.

To help you put Veo 3 to work immediately, the table below translates each headline capability into practical benefits, creator tips, and gotchas—so you can plan prompts, control shots, and assemble longer sequences with confidence.

Finally, it’s important to understand the current limitations: Veo 3 is limited to short clips (5–8 seconds) per generation. This is by design – generating long-form video continuously is computationally intensive and gives errors more chances to accumulate. So, creators typically work in snippets: 8-second scenes that can later be edited together. Additionally, some Veo 3 features are still in preview (as of mid-2025); for example, vertical 9:16 aspect ratio is not yet supported in the preview model, so it’s optimized for standard 16:9 widescreen at the moment. We expect these constraints to ease in future updates (e.g., longer durations as models improve), but for now, planning content around 5–8 second beats is part of the workflow.
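To make the "5–8 second beats" planning concrete, here is a minimal sketch that splits a target runtime into near-equal clip lengths. The function name `plan_clips` and its whole-second rounding are assumptions made for illustration; nothing here calls Veo or Flow, it only does the arithmetic.

```python
def plan_clips(total_seconds: int, min_len: int = 5, max_len: int = 8) -> list[int]:
    """Split a target runtime into near-equal clip lengths within [min_len, max_len]."""
    if total_seconds < min_len:
        raise ValueError("target runtime is shorter than the shortest clip")
    # Fewest clips that keep every clip at or under max_len (ceiling division).
    count = -(-total_seconds // max_len)
    base, extra = divmod(total_seconds, count)
    if base < min_len:
        raise ValueError(
            f"{total_seconds}s cannot be split into {min_len}-{max_len}s clips"
        )
    # The first `extra` clips carry one additional second each.
    return [base + 1] * extra + [base] * (count - extra)


print(plan_clips(30))  # [8, 8, 7, 7]
print(plan_clips(60))  # [8, 8, 8, 8, 7, 7, 7, 7]
```

A 30-second piece therefore needs at least four generations, and a one-minute piece at least eight, before counting any retries.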

Pricing and How to Access Veo 3

One thing to note: Veo 3 is not a standalone app you download – it’s a model accessible via various Google services and partners. There are a few routes to use it, each with different pricing considerations:

- Google Cloud Vertex AI (API access): For developers or technically inclined creators, Google offers Veo through its Vertex AI platform. Here, you pay per generation (per second of video) without any subscription. Official pricing for the previous model (Veo 2) was about $0.50 USD per second of generated video, i.e. roughly $4 for an 8-second clip (and $30 for a minute). This was reported when Veo 2 became generally available. Veo 3’s pricing on Vertex AI hasn’t been explicitly published yet (as it’s in preview), but it’s likely in a similar ballpark (the cost reflects the high compute needed for video+audio generation). Some users on Reddit using the API reported costs like ~$30 for a few iterations of a short film. The good news is the API model will refund your credits if a generation fails with no output (though partial issues like missing audio might still cost credits). If you just want to experiment lightly, Google Cloud does provide $300 free credits for new accounts which could cover dozens of short clips.

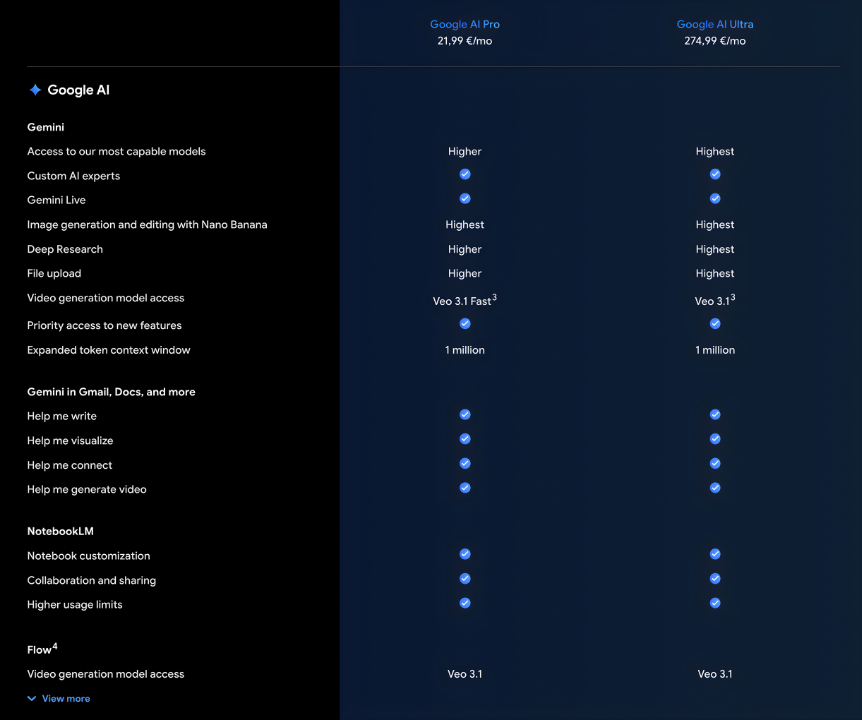

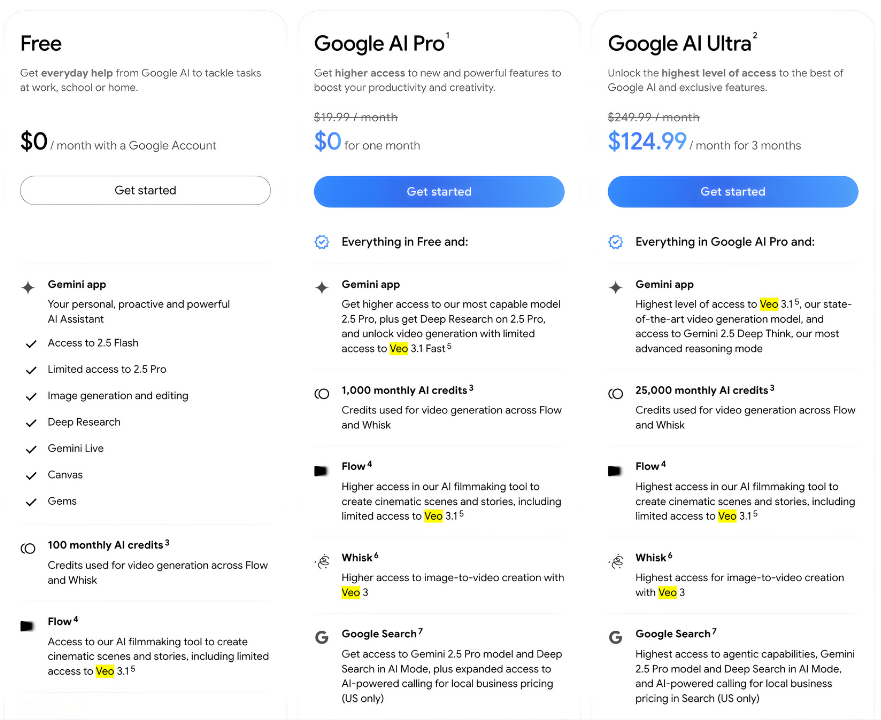

- Google AI Pro and Ultra (Consumer subscriptions): Recognizing that many creators are not developers, Google introduced two subscription plans:

- Google AI Pro – priced at $19.99/month (often with a free trial month). This plan includes access to AI features like Flow, Google’s Gemini chat, NotebookLM, etc. It gives a monthly allowance of generations (Pro users get 100 video generations per month in Flow). However, Veo 3 with audio was initially only in “early access” for Ultra, so Pro users might be using Veo 2 or a limited version for now. Google AI Pro is described as the next-gen of their AI services for individuals, comparable to offerings like ChatGPT Plus but across Google’s ecosystem.

- Google AI Ultra – priced at $249.99/month (targeted at serious creators and pros). Ultra includes everything in Pro plus the latest-and-greatest models at higher limits. Ultra subscribers got early access to Veo 3 including the full audio generation capabilities. They also get a large bucket of “AI credits” that can be used across Google’s AI tools (Flow, image gen, etc.). Early reports say Ultra comes with 12,500 credits per month, and each Veo 3 generation costs 150 credits. That works out to ~83 video generations per month on Ultra. In effect, Ultra users are paying about $3 per 8-second clip (though the first three months have been discounted 50% to ~$125/mo as a promo). Ultra also bundles other perks like large Google Drive storage (reportedly 30 TB+) – clearly aimed at prosumers who will generate and store a lot of content.

- Third-Party Platforms (Canva, Leonardo.ai, etc.): Google has wisely partnered with creator-focused platforms to integrate Veo 3. One major integration is Canva, the popular online design tool. Canva launched a feature called “Create a Video Clip” in June 2025, which is powered by Google’s Veo 3 model. From inside Canva’s interface, users can enter a prompt and generate a cinematic 8-second video with sound in a couple clicks. This makes AI video generation accessible to millions of Canva’s non-technical users. The feature is available to Canva’s paid tiers (Pro, Teams, Enterprise, and Nonprofits) and initially allows 5 video generations per month for those users. Essentially, if you already have Canva Pro (which is about $12/month or comes with many brand kits), you can dabble with up to five Veo 3-powered videos monthly at no extra cost. This is a big deal for small businesses and marketers on Canva – they can now generate short promo clips or dynamic social posts directly in the tool they use for other content. Canva noted they are working to expand this limit beyond 5 as the feature scales. Another integration is Leonardo.ai, a platform known for image generation. Leonardo announced that paid users can now tap into Veo 3’s video capabilities as part of their workflow. So, if you subscribe to Leonardo’s service (which is much cheaper than Google’s Ultra), you can experiment with Veo 3 outputs there, likely under some credit system. These third-party offerings provide a more affordable entry point to Veo 3 (with usage caps) – great for solo creators who don’t want to spend hundreds per month.

In summary, for a casual creator or small team, the easiest ways to try Veo 3 right now are: Canva (Pro) if you already use it, or the one-month Google AI Pro trial (to access Flow). Canva’s route gives a few videos at no extra cost, integrated in a friendly editor. The Google AI Pro route costs about $20/month after the trial and gives 100 generations/month within Flow – but note, unless Google has fully opened Veo 3 to Pro, you might be limited to Veo 2 or have no audio on that plan until Veo 3 exits preview. If audio and top quality are a must and you’re okay with the cost, the Ultra plan is the most direct route to Veo 3 with full audio, within its monthly credit allowance (roughly 83 generations). For developers or those wanting programmatic control, the Vertex AI API with pay-as-you-go pricing might be more economical (especially if you only need a few specific clips and can optimize your generations). For instance, one user generated an entire polished ad and reported it cost them only $7.25 in credits (they were likely spending Ultra credits rather than paying API rates, but either way about 7 or 8 dollars) – which, compared to a five- or six-figure production budget, is astounding.
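The figures quoted in this section can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated above (the reported Veo 2 rate of $0.50 per second, and the early-report Ultra figures of 12,500 credits per month at 150 credits per generation); the constant and function names are made up for illustration, and Veo 3's actual Vertex AI rate may differ.

```python
VERTEX_USD_PER_SECOND = 0.50      # reported Veo 2 rate; Veo 3 rate is an assumption
ULTRA_USD_PER_MONTH = 249.99      # list price, before any promo discount
ULTRA_CREDITS_PER_MONTH = 12_500  # per early reports
CREDITS_PER_GENERATION = 150      # per early reports


def vertex_cost(seconds: float, rate: float = VERTEX_USD_PER_SECOND) -> float:
    """Pay-as-you-go cost of generating `seconds` of video."""
    return seconds * rate


def ultra_generations(
    credits: int = ULTRA_CREDITS_PER_MONTH,
    per_generation: int = CREDITS_PER_GENERATION,
) -> int:
    """Whole generations that fit in one month's credit bucket."""
    return credits // per_generation


def ultra_cost_per_clip(monthly_price: float = ULTRA_USD_PER_MONTH) -> float:
    """Effective price per generation if every credit is spent."""
    return monthly_price / ultra_generations()


print(f"8 s clip on Vertex AI:  ${vertex_cost(8):.2f}")   # $4.00
print(f"60 s of video on Vertex: ${vertex_cost(60):.2f}")  # $30.00
print(f"Ultra generations/month: {ultra_generations()}")   # 83
print(f"Ultra cost per clip:     ${ultra_cost_per_clip():.2f}")  # $3.01
```

Failed or discarded generations raise the effective cost per usable clip on either route, so these are best-case numbers.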

Veo 3 in Action – Use Cases for Creators

How can solo creators, YouTubers, influencers, freelancers, coaches, indie e-commerce sellers, and small teams actually leverage Veo 3? Let’s explore some high-impact use cases and examples:

YouTube Video Creators and Storytellers

For YouTubers and independent filmmakers, Veo 3 opens up a new world of AI-generated B-roll and short film scenes. Imagine being a content creator who does storytelling or educational videos – instead of using stock footage or static images to illustrate a point, you can now generate a custom visual. For example, a history YouTuber talking about ancient sailing could generate a quick clip of a sailing ship in a storm with an old captain at the helm, dramatizing the narration. A horror story narrator on YouTube could create eerie atmospheric shots to accompany their voiceover. These short cutaway clips can make videos far more engaging.

Creators have already started experimenting with this. One filmmaker used Flow (with Veo 3) to produce an AI short film akin to a fantasy “Game of Thrones” style sequence. By keeping a consistent base prompt and iterating, he was able to maintain the same characters across multiple 8-second segments and stitch together a narrative. Veo 3’s consistency isn’t perfect on its own, but by using the same prompt keywords or reference frames, Flow helped carry over character looks from scene to scene. The result impressed viewers – many couldn’t believe the entire cast and scenery were AI-generated in an afternoon. In fact, a Redditor shared a short film (“Plastic”) made with Veo 3 where office scene clips were generated on the first try and had perfectly lip-synced one-liners. This demonstrates how a solo creator can produce skits or narrative videos with zero actors or camera crew – essentially letting Veo handle the cinematography. As one comment put it: “Created by one person and not a thousand” in the end credits. That speaks volumes about how this tech empowers individual creators to punch above their weight.

YouTube creators can also use Veo 3 to generate intros/outros or special effects for their videos. For instance, a tech reviewer could generate a fun 5-second animation of a robot talking, as a channel intro. A travel vlogger could spice up a montage by generating an AI drone shot over a city (with Veo 3’s realism, it might pass for real drone footage in stylized contexts). Music video creators might use it for abstract cut scenes or lyric interpretations. We are already seeing music visualizers and experimental shorts being made entirely with AI video tools like Runway; Veo 3 can achieve even more cinematic results, which could lead to an influx of AI-crafted music videos on YouTube and Vimeo. (In fact, observers predict that within a few years, YouTube will be filled with these AI-generated segments – and to some extent, it’s already begun.)

For purely entertainment-focused creators (e.g. animators, short film makers), Veo 3 is almost like having a universal “animation studio” on call. It lowers the barrier to produce fictional scenes. Of course, limitations apply – you won’t be making a 15-minute continuous video with it today, but you can certainly make a series of vignettes or a multi-part story. Creators have been successful doing ads and trailers: one user made a fake movie trailer using a series of Veo 3 clips, getting a result that some said looked better than SyFy Channel CGI from a decade ago (a tongue-in-cheek compliment, but it underscores that the quality is within TV-render range). For YouTubers in storytelling niches (horror, sci-fi, fantasy) or commentary niches that need visual aids, Veo 3 could become a go-to tool.

Content Repurposing for Social Media

In the world of fast-paced content marketing, repurposing is king. Veo 3 presents a novel solution for turning existing content (text, audio, ideas) into eye-catching video clips for platforms like Instagram, TikTok, Facebook, and LinkedIn. If you’re a podcaster or coach, for example, you likely have great audio content that could use a visual companion. Canva’s integration specifically highlights “turning podcast moments into social clips”. With Veo 3 powering that, you could take a key quote from your podcast episode, input a prompt like “video clip of a radio studio with animated sound waves as [host name] speaks inspiring quote X” and generate a branded clip for Instagram. This lowers the effort to produce audiograms or quote graphics – now you get a full video with motion and sound.

For bloggers or newsletter writers, think about transforming an article into a short promo video. Let’s say you wrote a blog “5 Tips for Small Business Success.” You could prompt Veo 3 for each tip: e.g. “A busy cafe owner flipping an ‘Open’ sign (Tip: Start early each day)”, “Close-up of hands typing on laptop analyzing sales chart (Tip: Know your numbers)”, etc., each as a quick 5-second visual. String those together (Canva or a simple editor can do this) and overlay text – suddenly you have a 30-second social video summarizing your blog post, generated in an afternoon. This is a very practical way small businesses can multiply their content reach using AI. It’s why keywords like “AI content repurposing tools” are trending – and Veo 3 fits that category by enabling the conversion of written content or ideas into polished video assets.
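As a rough sketch of the blog-to-video workflow just described, the snippet below keeps each tip together with its scene prompt and works out when each overlay caption should appear once the clips are played back to back. `Shot` and `caption_schedule` are hypothetical names; the code only organizes text and timings and does not call Veo or Canva. The two shots are the examples given above.

```python
from dataclasses import dataclass


@dataclass
class Shot:
    tip: str          # overlay text added later in an editor
    prompt: str       # scene description sent to the video model
    seconds: int = 5  # per-shot length suggested in the text


def caption_schedule(shots: list[Shot]) -> list[tuple[int, int, str]]:
    """Return (start, end, caption) for each shot when clips play back to back."""
    schedule = []
    start = 0
    for number, shot in enumerate(shots, start=1):
        end = start + shot.seconds
        schedule.append((start, end, f"Tip {number}: {shot.tip}"))
        start = end
    return schedule


shots = [
    Shot("Start early each day", "A busy cafe owner flipping an 'Open' sign"),
    Shot("Know your numbers", "Close-up of hands typing on laptop analyzing sales chart"),
]
for start, end, caption in caption_schedule(shots):
    print(f"{start:>2}s-{end:>2}s  {caption}")
```

Five such 5-second shots add up to 25 seconds, so the remaining time in a roughly 30-second video would presumably come from a title or closing card added in the editor.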

Additionally, vertical video generation will eventually be crucial for repurposing into Reels or TikToks. (As noted, the preview model is 16:9 only, but presumably vertical support will come soon given the dominance of mobile video.) Canva’s UI is already conversational and voice-enabled and could guide users through making a video for different formats. So a marketer could chat with Canva’s assistant: “Make a TikTok video about my product’s key benefit” – and behind the scenes Veo 3 might generate the core clip. With the multi-model approach (text + image + video AI in one place), Canva positions itself as a one-stop content studio. This trend hints at the future: all-in-one AI platforms (like Canva’s Magic Studio or Google’s own suite) where you can produce blogs, images, and videos in one workflow. Veo 3 is a key component in making that possible for video.

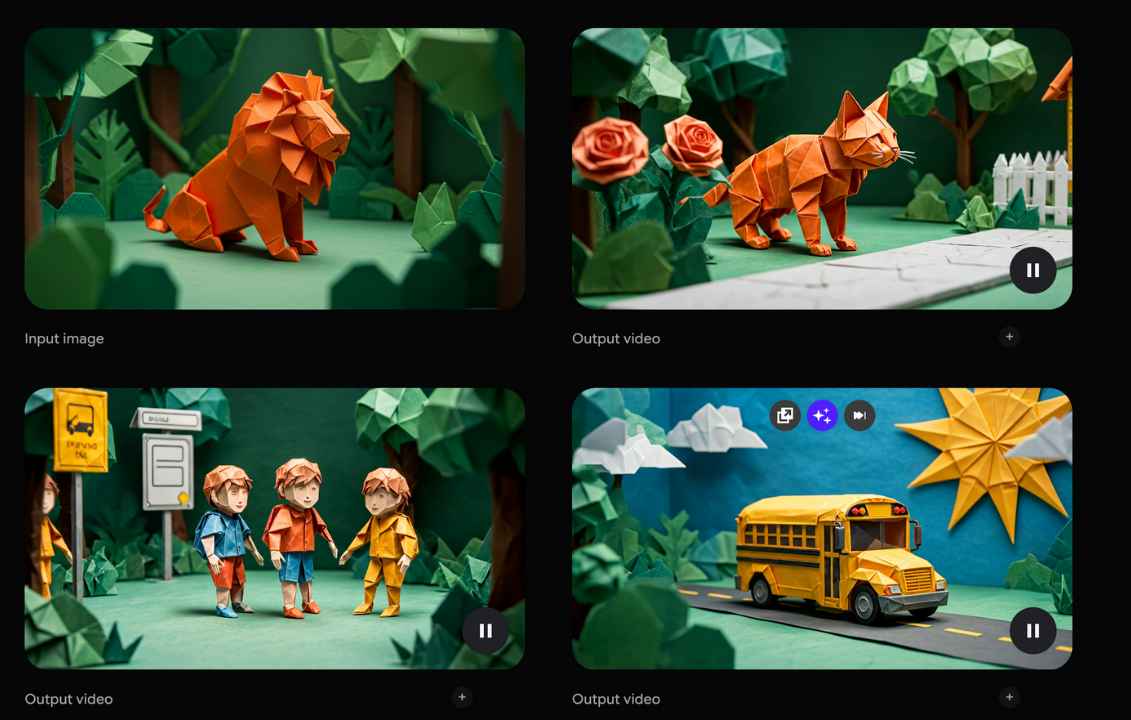

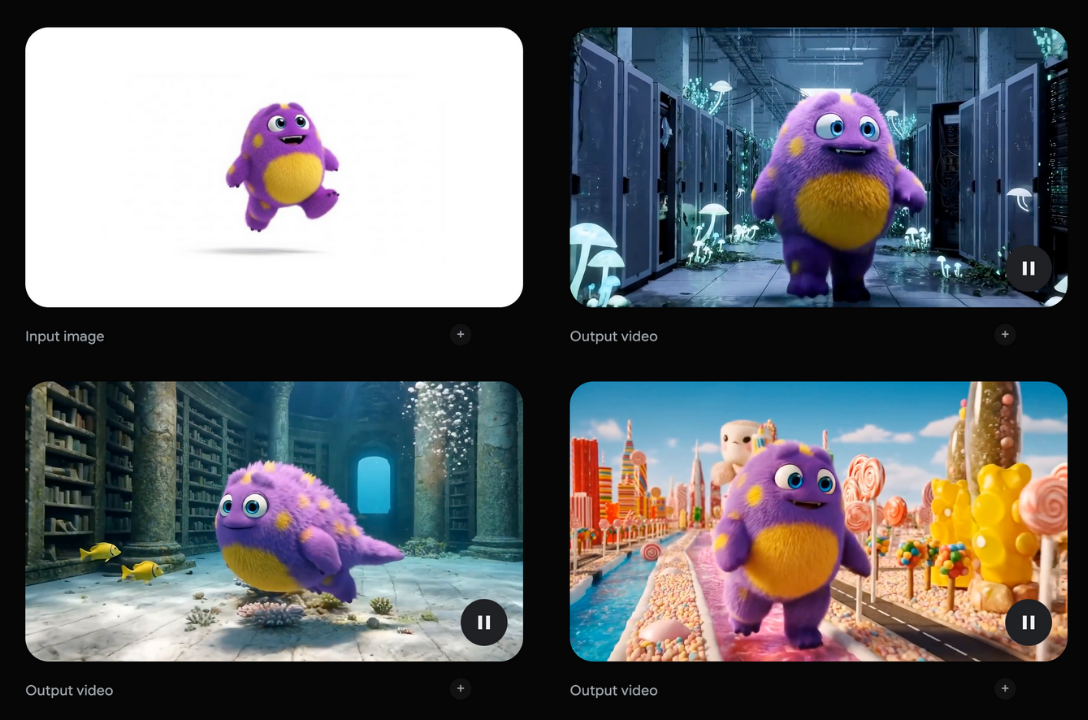

Example of Google's Veo 3 video generation

Online Video Marketing and Advertising

Marketers and small business owners are arguably one of the audiences who stand to gain the most immediate value from Veo 3. Creating professional-looking promo videos or ads has traditionally been expensive – either you hire a production team or spend hours with complicated software and stock footage. Veo 3 turns a lot of that on its head. As one early adopter exclaimed, “It’s pure insanity – this ad would’ve cost at least $50–150K with a traditional agency, but it cost me 3 hours and $7.25 in AI credits”. This person generated a 15-second company advertisement using Veo 3 and was so impressed he joked about buying more Google stock afterwards. The ad apparently featured talking actors (“talking heads”) with acceptable lighting and delivery – basically something that would pass for a real commercial at a glance. Commenters noted how convincing the human expressions were in the video.

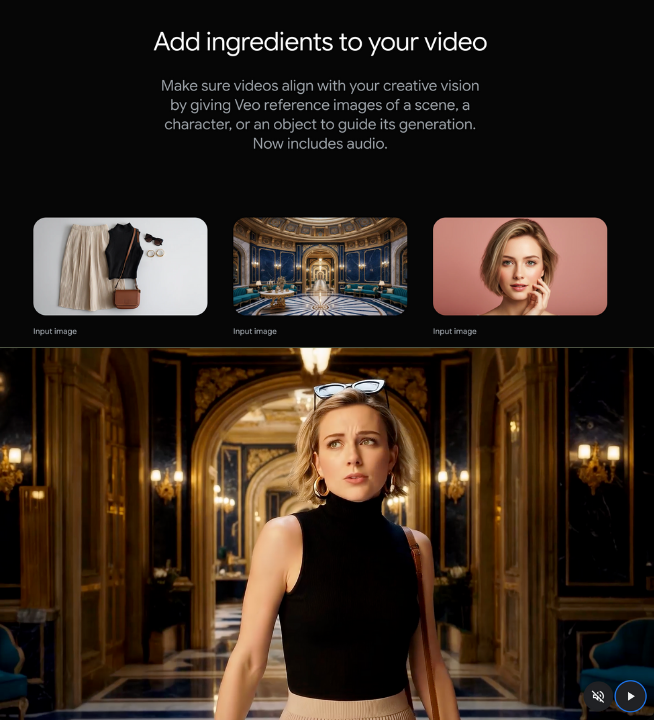

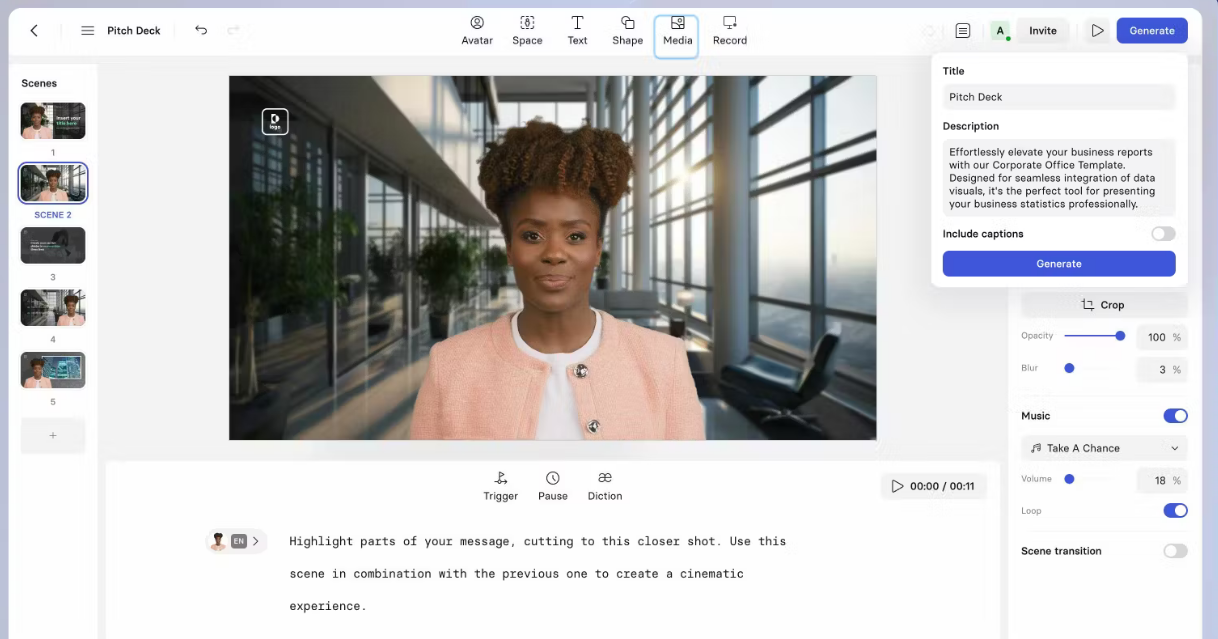

Canva’s integration of Veo 3 makes AI video easily accessible. Above: Canva’s “Create a Video Clip” feature in action – an 8-second scenic promo clip with text overlay, generated from a simple prompt. This kind of cinematic B-roll with on-brand text can be created in minutes, enabling small businesses and marketers to produce engaging video content without a film crew.

For social media ads, Veo 3 allows rapid A/B testing of ideas. You can generate a few variations of a product demo or lifestyle shot featuring your product, each with different atmosphere or messaging, then see which one resonates best. The speed is incredible: what might take weeks to coordinate a live-action shoot, you might do in an afternoon of prompt-crafting. And thanks to the audio component, your ads come with background music or jingle built-in (or you can instruct it to generate without music if you plan to add your own). Early experiments show it even handles talking customer testimonials: e.g., you could prompt “A satisfied customer says: ‘This gadget changed my life!’ while holding the product” and get a short clip of a person speaking that line. The realism of faces and speech is getting into somewhat uncanny territory, but as one Redditor observed, we’re basically at the point that many casual viewers “cannot tell what’s real”, especially if they’re not actively looking for AI artifacts.

Example of Google's Veo 3 video generation
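The A/B testing idea described above amounts to crossing a few settings with a few spoken lines and generating one clip per combination. A minimal sketch, assuming you manage the prompts yourself: `build_variants` is a hypothetical helper, and the two settings and the second spoken line are invented placeholders (only the first line comes from the example above). No video API is called here.

```python
from itertools import product


def build_variants(
    base_scene: str, atmospheres: list[str], spoken_lines: list[str]
) -> list[dict[str, str]]:
    """Create one labelled prompt per (atmosphere, spoken line) combination."""
    variants = []
    combos = product(atmospheres, spoken_lines)
    for index, (atmosphere, line) in enumerate(combos, start=1):
        variants.append(
            {
                "id": f"variant-{index:02d}",
                "prompt": f'{base_scene}, {atmosphere}. The customer says: "{line}"',
            }
        )
    return variants


variants = build_variants(
    base_scene="A satisfied customer holding the product",
    atmospheres=["in a bright kitchen", "on a city street at dusk"],  # placeholders
    spoken_lines=["This gadget changed my life!", "I use it every day."],
)
for variant in variants:
    print(variant["id"], "->", variant["prompt"])
```

Keeping a stable `id` per variant makes it easier to match each generated clip to its ad performance later. Note that every combination is a separate paid generation, so two settings and two lines already cost four clips.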

One limitation to note for marketers: brand consistency. If your brand requires a specific character (say a mascot or a spokesperson with a specific look), Veo 3 doesn’t yet allow fine-tuning on a specific face or exact logo usage. Everything is generated fresh from the prompt, with some randomness in how the model interprets it. However, you can describe a logo in the prompt, or overlay the real one and other graphics in editing afterwards. For now, Veo’s strength is more in generic but high-quality footage rather than exact reproductions. So you might use it to generate beautiful footage and then composite your real product photos or logo on top in post-production. Canva’s workflow encourages this: after generating the clip, it opens in Canva’s editor where you can add your brand kit assets, text, additional music, etc. That way, you still maintain brand guidelines while using AI to handle the cinematography.

For indie e-commerce sellers who need video for product listings: imagine generating a glamour shot of your handmade jewelry in a scenic setting, or a model walking down a street wearing your designed dress – all without hiring models or renting locations. Veo 3 could output that “stock footage” tailored to your product’s vibe. It’s like having a virtual advertising agency. The Canva announcement explicitly targets this, saying “anyone can produce polished videos with high-quality visuals, realistic motion, and synchronized audio” easily. They position it as making video creation effortless for those without big budgets or editing skills – which describes most small biz owners and influencers.

Another use case: real estate marketing. An agent could punch in a description of a dream home interior or a neighborhood scene and get a quick video to include in a listing video or social post. While it may not reflect a specific property (and using AI in listings has disclosure considerations), it can be used for general marketing like “living in [City]” mood pieces.

It’s worth noting the flip side as well: Because the tech is so new, some audience members might react negatively if they realize an ad is AI-generated. There’s a bit of a stigma or skepticism in some consumer circles (“oh, this is AI, not real, skip”). One commenter pointed out “once people see it’s AI they turn off and you lose engagement”. This is something marketers will have to navigate. However, as another person replied, we saw similar reactions to AI images and those have diminished over time. It’s likely that AI videos will become normal, and only blatant failures will draw criticism, whereas good quality content (AI or not) will do its job. Marketers should ensure the messaging is strong and consider not leaning into any “gimmicky” AI tells. If done well, an AI-generated ad need not announce its origin – it’s just a compelling ad. In fact, an AI video that truly resonates emotionally (like the “plastic kid” story video where viewers felt genuine sympathy for the AI character) can engage audiences as much as real content. Story and creativity still matter – Veo 3 just handles the execution heavy-lifting.

Educational Content and Coaching

For educators, coaches, and communicators, Veo 3 provides a powerful way to visualize concepts that would otherwise be abstract or costly to illustrate. In online courses or educational YouTube channels, you can now pepper in short illustrative videos generated on-the-fly:

- Explaining Concepts: If a science teacher is explaining the water cycle, they could generate a quick animation of “water evaporating from an ocean, forming clouds, then raining on mountains” as a background visual. Or a history teacher talking about ancient Rome could generate a flyover of a Roman city or a reenactment snippet of a marketplace in that era. These bring lessons to life without having to search for existing footage. Veo 3’s prompt fidelity means you can describe the exact scenario you need. And since it can produce different art styles (even animated or painterly styles if prompted), one could tailor the visuals to be more illustrative and less photoreal if desired (to match a cartoon style for kids, for example).

- Training and How-To Videos: Coaches and online instructors often face the challenge of producing demonstration videos. With Veo 3, a cooking coach could generate a generic clip of a chef chopping vegetables or plating a dish to emphasize a step, rather than filming it themselves. A fitness coach could generate a figure performing a yoga pose in a serene environment to insert into their video (a caution: AI humans may not yet handle nuanced movements accurately, though this should improve). Language coaches could create situational dialogue scenes – e.g., two characters meeting at a cafe – as listening comprehension exercises, with Veo’s generated voices providing the audio. The dialogue might even be non-English, since the prompt could be written in other languages. Google’s models usually support many languages for text, so this is plausible, but it’s not confirmed for Veo’s audio.

- Presentations and E-Learning: Small teams making e-learning modules or startups making pitch decks can use Veo to generate illustrative videos for their slides. Instead of a static image on slide 5, why not an 8-second looping video background? Google itself has hinted at this use – e.g., using “video clips in pitch decks or product teasers”. Students working on projects could also use it to create more engaging assignments (though that raises academic honesty questions if not original – but if it’s just for visual aid, it’s fine). In corporate training, an instructor could easily generate a scenario video (like a customer service role-play) to discuss in a workshop.

One real example: a creator named Henry Daubrez used Veo 2 previously to create a moving short film about love, and he’s now using these tools in his storytelling about his creative journey. This indicates even artists and literary educators are using AI video to explore themes and share ideas in a new medium.

Overall, Veo 3 stands to make educational content more immersive while significantly cutting production time. Educators do need to vet the outputs for accuracy (AI might hallucinate some visual details incorrectly – e.g., showing a 5-legged insect or a map with wrong labels – because it’s not a factual retrieval model). So, while it’s great for illustrative purposes, for factual visuals one should be careful. Google has a Responsible AI note that Veo may require special approval for content with people (especially children), which is relevant in educational contexts – e.g., if generating a video involving children, an educator might hit a block unless using an allowed setting or getting approval. This is simply something to be mindful of and can usually be addressed by adjusting the prompt (e.g., use adults to simulate a scenario or avoid realistic child depiction).

Indie Game Devs and Creative Storytelling

An interesting niche: indie game developers and storytellers could use Veo 3 to prototype or even produce assets for their narratives. For instance, a game dev could generate short cutscene clips or background animations for their game using Veo (rather than hand-animating or filming). While 8 seconds is short, clever editing can combine clips for longer scenes, and the 4K resolution support means it’s viable to include in higher quality projects. Also, folks who run tabletop RPGs or D&D campaigns have started using AI image generators to create scene art – with Veo 3, they could create looping scene videos to set the mood for their players (imagine a looping tavern scene with fireplace crackling audio). The cross-disciplinary creator – someone writing a novel, for example – could create a “book trailer” or animated concept art of their story with this tech.

In digital art and film festivals, we’re likely to see entirely AI-generated shorts where an artist’s role was mainly as director through prompts. Google’s own experimental film project “Flow TV” showcases clips made with Veo by various creatives. Tools like Flow will inspire more filmmakers to try hybrid workflows (part AI, part live action). One of Google’s collaborations was with filmmakers to understand how Flow/Veo can fit in – and they successfully produced short films mixing AI and other techniques.

Indie Brands and Social Storytelling

For indie brands and influencers building a narrative around their identity, Veo 3 can help tell brand stories. Think of it as creating your own commercials or story-driven content without an agency. A small sustainable fashion brand could make a poetic 8-second clip of “floating cotton fabric turning into a dress on a mannequin in a sunlit studio” to convey their values. A coffee roaster could generate artsy B-roll of “coffee beans flying in slow motion with abstract shapes” for a campaign about the aroma and energy of their brew. These kinds of creative visuals can set a mood on websites and social feeds.

Influencers and coaches who mainly talk on camera can augment their videos with illustrative AI cutaways (much like news shows cut to illustrative footage). If a life coach on Instagram is telling a motivational story about a person climbing a mountain (metaphorically), they could literally cut to a beautiful shot of a person hiking up a mountain at sunrise generated by Veo, making the storytelling more cinematic. Because Veo 3 can generate in different cinematic styles (e.g., “like a Studio Ghibli animation” or “in the style of a vintage documentary”), a creator can ensure the clip matches their storytelling tone.

Lastly, an emerging application is personalized content – you could generate videos tailored to a specific audience or even individual. For example, an indie children’s book author could generate a short video of the book’s characters and send it as a thank-you to people who bought the book. Coaches could generate visualization exercises for clients (e.g., “imagine yourself confidently giving that presentation” accompanied by a video of a person succeeding on stage).

Real-World Performance: What Creators Are Saying

No tool is perfect, and Veo 3 is very new – so what are users and early adopters finding in practice? The community buzz is a mix of astonishment at the capabilities and some candid notes about bugs and limits.

On the positive side, creators are calling Veo 3 “insanely good” and potentially game-changing. Many note how Google’s own demos didn’t do it justice – it was quietly released with little fanfare, but once people tried it, they realized it was far beyond what they expected. One comment likened it to OpenAI’s rumored video model (“Sora”) finally actualized – except Google hardly hyped it in advance. The organic marketing from user-generated clips has actually overshadowed official marketing; some of the best outputs people have shared (“fake ads,” short films, etc.) are more impressive than the formal examples shown at launch. This indicates that with creative use, Veo 3 can exceed even its initial showcase – always a good sign for a tool.

Quality of output:

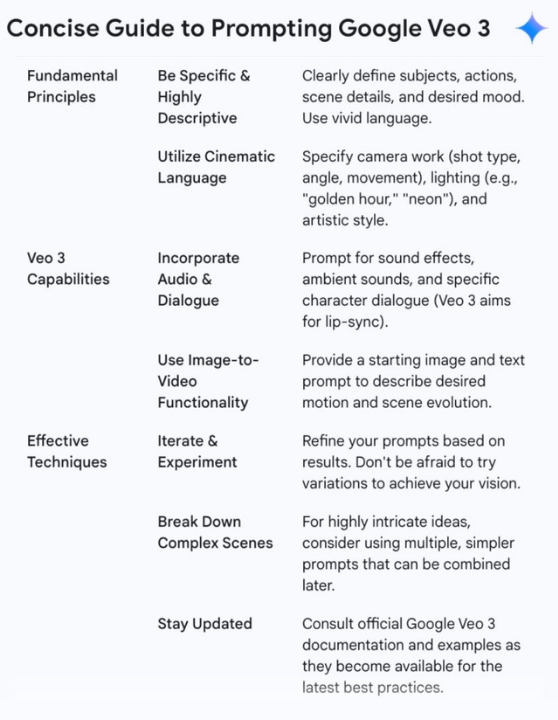

Many users report being genuinely fooled or on the fence about whether some Veo 3 videos were real or AI. For instance, in the ad example above, the commenter said it was the most convincing one they’d seen, especially noting the realistic people’s expressions. Another viewer, scrolling by an AI-made clip, thought it was just high-end video game graphics or a movie scene until they realized it was AI. The consensus is that Veo 3’s coherence (within 8 seconds) is very high: motion is smooth, camera work looks intentional, and subjects generally stay on model. This addresses a common issue seen in earlier gen models where things would morph oddly frame to frame. One user specifically praised the tracking and consistency of objects in Veo 3 clips – for example, a character or object stays the same shape as it moves, rather than warping (a big achievement in AI video). It’s not perfect, but a leap forward.

A user named CodeSamurai shared prompt-writing techniques that helped get consistent results: structuring the prompt with sections (Subject, Context, Action sequence, Style, Camera) to guide the model. Using such methods, he was able to reliably produce sequences close to his vision. However, he did mention one quirk: the model “really wants to add subtitles to everything”! Indeed, several users noticed that if you include dialogue, sometimes Veo 3 will burn-in text subtitles in the video (perhaps due to training data that had subtitles). This can be a bit annoying if you didn’t want them. Some have tried to tell it “no subtitles” with mixed success. It’s a minor artifact and one we expect will be tunable in future.
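The sectioned prompt structure mentioned above (Subject, Context, Action sequence, Style, Camera) can be kept consistent across clips with a small template. This is only a sketch: the exact layout CodeSamurai used is not given, so the "Label: text" format, the `ScenePrompt` class, and the wording of the no-subtitles line are all assumptions, and as noted, asking for no subtitles does not always work. The camera value is an invented example.

```python
from dataclasses import dataclass


@dataclass
class ScenePrompt:
    subject: str
    context: str
    action: str
    style: str
    camera: str
    no_subtitles: bool = False  # appends a request that the model may ignore

    def render(self) -> str:
        """Join the non-empty sections into one labelled, multi-line prompt."""
        sections = [
            ("Subject", self.subject),
            ("Context", self.context),
            ("Action sequence", self.action),
            ("Style", self.style),
            ("Camera", self.camera),
        ]
        lines = [f"{label}: {text.strip()}" for label, text in sections if text.strip()]
        if self.no_subtitles:
            lines.append("No subtitles or on-screen text.")
        return "\n".join(lines)


prompt = ScenePrompt(
    subject="A wise old sailor",
    context="On a ship at sea, waves crashing, seagulls crying in the background",
    action="He gives a monologue about the untamed ocean",
    style="Cinematic, realistic",
    camera="Slow push-in on the sailor's face",  # illustrative value
    no_subtitles=True,
)
print(prompt.render())
```

Reusing the same `subject` and `style` text across several `ScenePrompt` instances is one way to apply the "consistent base prompt" approach when stitching multiple 8-second segments together.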

Audio quality:

The addition of audio delights creators – hearing the characters speak and environments come alive is surreal. The voice quality is decent, though not on par with the very best dedicated text-to-speech. It tends to match the character (an old man will have an older gruff voice, etc.). There are some current bugs: “Some generations end up with no sound…and those still count against your credits”, one beta user noted. So occasionally you might get a silent video (especially if the prompt was complex) – likely a glitch in the preview version. Google will probably iron that out; in the meantime, early adopters had to just regenerate and maybe request a credit refund. When it works, though, users say lip-sync is on point and the ambient sound adds tremendous realism. In one shared clip of a “news anchor” speaking, people were impressed by the synchronization of the AI newscaster’s lips with the speech – a known hard task in deepfakes that Veo 3 handles within its generation.

Bugs and hiccups:

As mentioned, subtitle artifacts and occasional silent outputs are known issues. Another user reported that their last 6 generations failed outright, and called the product “half-baked” at release for charging a high price while such failures occur. Indeed, Google kept Veo 3 in a sort of gated preview for Ultra users, presumably because it expected some instability. There are also complaints about the UI and workflow: one person felt the interface is clunky, saying it seemed Google didn’t fully realize what it has with Veo 3, given the “shitty UI and minimal advertising”. That’s likely a reference to the Google Labs Flow interface, which is functional but not yet as smooth as, say, Canva’s. It’s a common trade-off: Google exposes models early, but the polished user experience often comes from third parties (like Canva) or after a few iterations.

Another limitation noted: strict content constraints. A commenter “strigov” mentioned that even on the Ultra plan, Veo 3 was “strictly restricted” in terms of what it would generate and that the servers were highly loaded and unstable at times. This suggests that popular demand might occasionally cause slow generation times or queueing. Also the restrictions mean you can’t, for instance, generate anything violent, sexual, or too politically sensitive. If you try, you might just get an error or a very sanitized output. For most creator use cases this is fine (you probably shouldn’t be generating extreme content anyway, plus it might violate platform guidelines if you posted it). But creators should be aware – if Veo refuses a prompt, it’s likely hitting a safety filter. For example, requests for realistic public figures or certain medical scenarios might be blocked. Over time, these systems may allow more flexibility with proper safeguards (maybe requiring verification for some content).

Cost vs value:

Many creators acknowledge the steep cost (“insanely expensive once you use up your quota”), but they also acknowledge that the value gained is huge. If an indie filmmaker can produce something that would have cost tens of thousands in VFX or sets, $250 (or even $1000) begins to look reasonable. However, for everyday content creation (social posts, YouTube videos), costs need to come down for wider adoption. The good news is that there is already price competition: Runway, Pika, and others (discussed next) are cheaper, albeit with lower quality. And historically, tech like this tends to become cheaper quickly. Google’s pricing might drop as the model moves from preview to general availability; there’s speculation that the $250 Ultra plan targets a niche and could come down in price after the early-adopter phase. In any case, many are trying Veo 3 through the free or cheaper channels (like the Canva/Leonardo route or the free trial credit route).

Overall, community sentiment is excitement tempered with a bit of wariness. On one hand, creators are thrilled by the creative freedom (“this is insane”, “unbelievable” are common exclamations). On the other hand, some express dread at the broader implications – e.g., how this could flood media with fake or synthetic content that’s indistinguishable from reality. For individual creators, though, the consensus is you should embrace the tool to amplify your creativity. As one filmmaker said, these AI tools are an “enabler, helping a new wave of filmmakers more easily tell their stories”, and we’re just at the beginning of understanding the full potential. The learning curve involves new skills (prompt writing, iterating with an AI, maybe a bit of Python if using the API) but nothing insurmountable – especially compared to the complexity of mastering real film equipment or advanced animation software. In effect, Veo 3 can be seen as a very smart “AI assistant” for visual content creation, one that every small creative team might soon have in their toolkit.

Alternatives and Competitors to Veo 3

With generative AI advancing on all fronts, it’s no surprise there are multiple tools aiming to do what Veo does (or portions of it). Creators should be aware of the market alternatives, both to compare capabilities and to find the right fit for their needs/budget. Here are some notable ones:

Runway Gen-2:

Runway ML is a pioneer in creative AI tools, and their Gen-2 model offers text-to-video and image-to-video generation. Runway Gen-2 launched publicly in mid-2023 and has been improving since. It can produce ~4 second video clips from a prompt and also allows you to input an image or existing video to guide the results. Key differences: Gen-2 currently does not generate audio – you only get silent video, so creators must add sound separately. Its visual fidelity is decent but generally not as realistic as Veo 3 for complex scenes. Gen-2 tends to produce artfully dreamlike or stylized outputs, which can be great for music videos or abstract content, but if you need true photorealism with physics, Veo has the edge. Runway’s big advantage is accessibility and price. It runs in the cloud via a web interface that’s user-friendly, and pricing is credit-based. For example, $12/month on the Standard plan gets you 625 credits (about 125 seconds of Gen-2 video). Some analysis pegs Gen-2’s cost at ~$0.05 per 4-second render – significantly cheaper per clip than Veo on Google. However, often you may need multiple tries to get a perfect clip (one user noted maybe 1 in 10 Gen-2 outputs are really usable, so effective cost per good clip was more like $0.50 for 4s). In any case, Runway is creator-friendly with features like a built-in video editor and green screen effects. They even have Gen-1 and Gen-3 (alpha) focusing on different aspects like longer durations and better coherence. If your budget is small, Runway is a great starting point. It’s especially popular for TikTok creators making quirky AI visuals or musicians making low-budget AI music videos. Just remember you’ll need to bring your own audio/music since it’s visuals only.
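The pricing figures above are easy to sanity-check with a little arithmetic. The short Python sketch below uses only numbers quoted in this section ($12 for about 125 seconds, 4-second clips, the ~$0.05 third-party estimate, and the "1 in 10 usable" anecdote); the function names are illustrative. Note that the plan figures work out to roughly $0.38 per 4-second clip, noticeably higher than the quoted ~$0.05 estimate, so treat both as rough guides rather than exact prices.

```python
PLAN_PRICE_USD = 12.0      # Standard plan price quoted above
PLAN_SECONDS = 125         # seconds of Gen-2 video quoted for 625 credits
CLIP_SECONDS = 4           # typical Gen-2 clip length quoted above
QUOTED_CLIP_COST = 0.05    # third-party per-clip estimate quoted above
USABLE_RATE = 1 / 10       # "maybe 1 in 10" outputs are usable (one user's anecdote)


def cost_per_clip(plan_price: float, plan_seconds: float, clip_seconds: float) -> float:
    """Price of one clip when the plan is billed per second of output."""
    return plan_price / plan_seconds * clip_seconds


def cost_per_usable_clip(clip_cost: float, usable_rate: float) -> float:
    """Expected spend per keeper if only a fraction of renders are usable."""
    if not 0 < usable_rate <= 1:
        raise ValueError("usable_rate must be in (0, 1]")
    return clip_cost / usable_rate


plan_clip_cost = cost_per_clip(PLAN_PRICE_USD, PLAN_SECONDS, CLIP_SECONDS)
effective_quoted = cost_per_usable_clip(QUOTED_CLIP_COST, USABLE_RATE)
effective_plan = cost_per_usable_clip(plan_clip_cost, USABLE_RATE)

print(f"Per clip from plan figures:   ${plan_clip_cost:.2f}")
print(f"Per usable clip (quoted est): ${effective_quoted:.2f}")
print(f"Per usable clip (plan-based): ${effective_plan:.2f}")
```

Whichever per-clip figure you trust, the usable-rate divisor is what dominates the real cost, so it is worth tracking how many of your own renders you actually keep.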

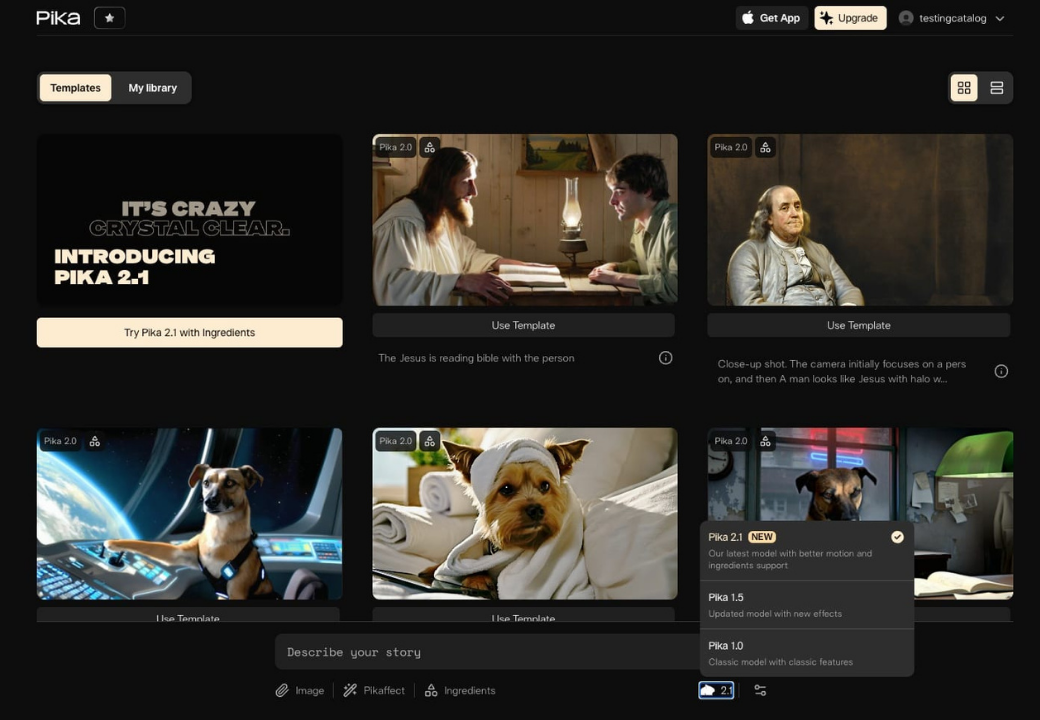

Pika Labs (Pika AI):

Pika is another rising tool, known particularly on Twitter and Discord communities for its fun text-to-video results. Pika Labs’ AI can take a text prompt or an image and generate a 4-second video (24 fps). Many creators use Pika to animate still images (for example, taking an artwork or a photo and giving it subtle motion or transforming it). It also has unique effects like “Poke it” or “Tear it” to add stylistic transitions. Pika’s outputs are typically a bit smaller resolution and more artsy/animation-like as opposed to cinematic realism. For instance, people use it to create looping animated art pieces or short meme videos. It’s available as an app (even on iOS/Android) and has a free trial model. Pricing: As of now, Pika offers some free usage and then paid tiers (the details often change, but it’s far more affordable than Google’s Ultra). For a creator who wants to generate quick B-roll for social posts or GIF-like videos, Pika is a nice tool. It’s also fast – generating a 4s clip often takes just a few seconds on their servers. The trade-off is you won’t get complex storytelling or audio. You also have less control over the outcome (it’s more for loose creative exploration). But Pika has garnered attention as a go-to for making AI “animations” on the fly. It could be seen as a complement: you might use Pika for simple needs (like an animated background) and Veo for heavy scenes.

Luma AI (Dream Machine):

Luma is known for its AI 3D and video tools – originally for photogrammetry (turning real photos into 3D models), but they’ve expanded into AI video generation. Luma’s Dream Machine allows text-to-video and image-to-video with a focus on short-form videos for platforms like YouTube Shorts, Instagram Reels, etc. They tout “professional-grade” visuals and allow both image animation (turn a static image into a dynamic video with camera moves) and pure text generation. Luma’s background in 3D means they might have unique strengths in camera consistency and depth – possibly better “scene geometry” so that camera pans look natural. For example, they demonstrate turning a single image of a landscape into a smooth fly-through video, using AI to imagine the in-between frames. Luma Dream Machine runs on web and iOS, with Android “coming soon”. Pricing: Luma had free credits during beta, but as one Redditor noted, it recently moved to paid-only for video gen. The exact pricing isn’t front-and-center, but expect a subscription or credit model (likely under $50/mo for moderate use). In comparisons, Luma’s output quality is good, though currently Veo 3’s photorealism with complex subjects seems superior. However, Luma might be better for certain types of shots, especially if leveraging their 3D knowledge (like a car drive-by scene might be handled well). If you create a lot of vertical videos or e-commerce spins of products, Luma could be interesting. It’s also an all-in-one mobile app for AI content (similar to what one might call an AI assistant app on Android/iOS for creative tasks).

Synthesia:

Shifting gears, Synthesia is an AI video generator of a different flavor – it specializes in presenter-style videos with AI avatars. Synthesia allows you to type a script and choose a digital avatar (a realistic human-like presenter) who will speak your script in a chosen language. It’s widely used for corporate training, marketing explainers, and localized video content. Capabilities: Synthesia does not generate arbitrary scenes or moving cameras; it’s usually a person on a plain background or a stock template, speaking to the camera. Think of it as a virtual spokesperson for hire. It also doesn’t generate audio beyond the avatar’s speech (and some background music you can add). So, it’s not directly comparable to Veo for cinematic storytelling. However, for business use cases (product demos, onboarding videos, tutorials), Synthesia is a strong alternative if you specifically need that human face delivering lines clearly and you want fine control of the script. Pricing: Starts around $18–30/month for a basic plan, which includes a certain number of video minutes (e.g., 10 minutes per month). It’s much cheaper than Veo for producing minutes of talking-head content. Also, Synthesia's quality in its niche is high – the avatars look quite real (though discerning viewers might notice they’re AI). Many small businesses use Synthesia for things like quick promo videos or multi-lingual messages (since it supports 120+ languages). If you are a marketer whose primary need is explainer videos with voiceover, Synthesia might be the more efficient tool. On the other hand, it can’t generate, say, an owl and a badger talking in a forest 🙂 – Synthesia is limited to their preset avatars.

ElevenLabs + Stock Footage:

This is not a single product but a mention of a DIY alternative: before Veo 3’s audio came along, creators would pair an AI video generator with an AI voice generator. For instance, one could use Runway Gen-2 or stable diffusion animations for visuals, then use ElevenLabs (a top-tier AI voice generator) to create a voiceover, and then manually sync them. Some might still prefer this route if they need longer narration or specific voice styles (ElevenLabs can clone voices or do long narration better). Also, traditional stock footage sites remain an alternative: sometimes the quickest way to get a generic clip is to buy/download one. However, stock libraries can’t offer the specificity of AI (you often won’t find exactly what you imagined), and costs per clip can add up too. So many content creators are weighing: should I search stock or just prompt AI? Increasingly, for unique shots, AI is winning out – though for very common shots, stock is still handy and might be more immediately polished.

Open-Source and Upcoming Models:

It’s worth noting that the landscape is evolving fast. There have been open-source text-to-video attempts (like ModelScope’s early release), but their quality is far behind (very blurry 2-3 second GIF-like outputs). However, just as open-source image models (Stable Diffusion, etc.) caught up, we may see open video models in 2025–2026 that challenge the closed ones. Big players like Meta have also demonstrated research models (e.g., Meta’s Make-A-Video and Voice+Video demos); these are not publicly available, but they indicate more competition. OpenAI’s video model, Sora, was previewed in early 2024 and opened to paying ChatGPT users in December 2024. Some community members compare Veo 3 with Sora, often concluding that Veo is currently ahead, though the next version could change that. For a creator making tool decisions now, Veo 3 and Runway are the leading edge, but it’s wise to keep an eye on new announcements, since video generation will be a hot area. Tools like Kapr or LeiaPix (for 2D-to-3D animation) also occupy niches for short animated content. And for specific domains like gaming or VR, we might see specialized generators (NVIDIA, for instance, works on generative AI for game assets).

In comparing alternatives, consider integration and workflow. One reason Canva + Veo 3 is notable is the integration into a broader design suite. Similarly, Runway’s integration of Gen-2 into a video editor or Luma’s integration into a mobile app workflow can save time if those match your style. If you’re looking for an “all-in-one AI platform”, Google’s approach is to integrate all modes (text, image, code, video) under the Google AI umbrella (Gemini, Imagen, Veo, etc.) accessible via one account. This is somewhat analogous to how Microsoft is positioning Copilot across its Office suite. So, we may soon see deeper integrations – e.g., Google Slides might let you insert a Veo video generation directly into a presentation; or Android phones might get an app to generate videos on-device (though that might be further off due to processing needs). For now, the combination of Gemini + Veo 3 + others in Google AI Pro/Ultra is Google’s “all-in-one” offering, whereas Canva Magic Studio is a third-party all-in-one solution.

Choosing the right tool: If you are a YouTuber or creative storyteller, Veo 3 (despite the cost) currently delivers the most wow-factor and creative control for cinematic scenes. Runway Gen-2 is a solid second choice if budget is limited, especially for more abstract or artsy content (and it’s improving rapidly – Runway’s Gen-1 to Gen-2 leap was big, and Gen-3 is in alpha testing). If you are a small business marketer mostly needing talking presentations or product showcases, Synthesia or combos of simpler tools might suffice; but if you want to stand out with creative ads, investing in Veo 3 (directly or via Canva) could set you apart. For mobile-first creators (Instagrammers, TikTokers) who maybe do everything on phone, Pika or Luma’s apps might be the go-to for quick results on the move. Also, watch out for CapCut and other popular mobile video editors possibly adding AI video gen modules soon – it’s likely coming.

In summary, Google Veo 3 sits at the high end of quality and capability, particularly with its unique native audio generation and Google’s powerful AI backbone. It’s part of a trend toward all-in-one AI content creation platforms that save time and open creative possibilities for marketers, small businesses, and solo creators. While it’s early and the tool is premium, the trajectory is clear: tasks that once required large teams and budgets, like producing a video, can now be achieved by solo creators with AI assistance.

Google Veo 3’s relevance for digital creators is thus two-fold: practical productivity (make content faster, repurpose easily) and creative possibility (imagine visuals you couldn’t before). It’s a technology that can augment human creativity – provided we learn how to communicate our vision through prompts and embrace a bit of iterative experimentation. As the ecosystem grows (with competitors and evolving models), creators will have an expanding toolkit. But at this moment, Veo 3 is a frontrunner showcasing what the “must-have AI tools for YouTubers and content creators” are going to look like. It truly feels like we’ve entered the era where a single individual with the right AI tools can produce content on par with entire studios – and that’s as exciting as it is disruptive.

FAQs

Direct Ultra access costs far more than fifty bucks, but there are two budget-friendly routes. Canva Pro users get five Veo-powered clips a month inside an interface they already pay roughly $12 for—perfect for monthly promo videos or ad tests. New Google AI Pro subscribers can trial Flow for free, then continue at $19.99 per month with 100 monthly generations (audio availability may lag during preview). If you batch content and reuse each clip across platforms, that pricing easily outperforms buying comparable stock footage. Just plan prompts carefully so you don’t burn credits on discarded iterations.
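For a rough per-clip comparison of the two budget routes, the Python sketch below divides each monthly price by its clip allowance. The prices and allowances are the ones quoted in this answer (Canva’s is approximate); the `keep_rate` parameter is a hypothetical knob for modelling discarded iterations.

```python
# (monthly price in USD, clips or generations per month) as quoted above
PLANS = {
    "Canva Pro": (12.00, 5),
    "Google AI Pro": (19.99, 100),
}


def cost_per_kept_clip(monthly_price: float, monthly_clips: int, keep_rate: float = 1.0) -> float:
    """Monthly price spread over the clips you actually keep.

    keep_rate is the fraction of generations that end up usable
    (1.0 means every clip is a keeper).
    """
    if not 0 < keep_rate <= 1:
        raise ValueError("keep_rate must be in (0, 1]")
    return monthly_price / (monthly_clips * keep_rate)


costs = {name: cost_per_kept_clip(price, clips) for name, (price, clips) in PLANS.items()}
for name, cost in costs.items():
    print(f"{name}: ${cost:.2f} per clip if every generation is kept")

# Same plans if only half of the generations are usable.
costs_half = {name: cost_per_kept_clip(price, clips, keep_rate=0.5)
              for name, (price, clips) in PLANS.items()}
```

This treats the whole subscription as the cost of the clips, which overstates the price if you already pay for Canva Pro or Google AI Pro for other reasons.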

Yes—if you can type a sentence, you can direct a Veo 3 scene. Canva’s implementation hides every technical knob: you enter a natural-language prompt, pick aspect ratio, and hit “Generate.” Google’s Flow offers more controls but still runs in a browser, no coding required. For dev-savvy teams, the Vertex AI API unlocks deeper automation, yet the basic creator experience remains drag-and-drop simple. That makes Veo 3 an accessible upgrade path from image generators, even for hobbyists or side-hustlers.

Start by lifting key quotes, stats, or visuals from your blog, webinar, or podcast script. Feed each snippet into Veo: “Animated chalkboard lists the three growth levers while upbeat voice reads them aloud.” In minutes you’ll have snackable clips for Reels, LinkedIn posts, or ad retargeting, all brand-aligned from the same base message. Because Veo handles audio, you skip separate text-to-speech steps, slashing production time further. The result: a single hero asset becomes a multi-platform campaign without hiring editors.

Google hasn’t released a standalone Veo app yet, but Flow runs smoothly in mobile Chrome and prompts sync with your Google account. Canva’s mobile apps already expose the “Create a Video Clip” button, so you can generate and post straight from your phone—arguably the best Android assistant workflow today. Luma and Pika offer dedicated apps, yet they lack Veo’s audio realism. Until Google ships a native app, a Canva-plus-Drive combo is the quickest mobile path to publish-ready Veo footage.

Veo 3 lets you generate cinematic B-roll, intros, and dialogue scenes on command, shrinking days of shooting into minutes of prompt-crafting. Native 4K visuals and synced audio mean your cutaways don’t look like filler; they feel like studio footage tailored to your script. Because clips render in eight-second bursts, you can iterate rapidly, lock in the perfect shot, then assemble sequences inside your editor. That speed-to-quality ratio is why some early adopters say Veo 3 has already replaced stock footage libraries for them. If you’re chasing channel growth, fresh visuals without extra crew costs can be the difference between “just another upload” and a binge-worthy series.
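Since clips come in eight-second bursts, it helps to estimate how many you need before you start spending credits. The Python sketch below is a simple planning aid; the function names and the default of three takes per shot are assumptions for illustration, not a recommendation from Google.

```python
import math

CLIP_SECONDS = 8  # maximum clip length cited in this article


def clips_needed(target_seconds: float, clip_seconds: int = CLIP_SECONDS) -> int:
    """Number of clips required to cover a target runtime."""
    if target_seconds <= 0:
        raise ValueError("target_seconds must be positive")
    return math.ceil(target_seconds / clip_seconds)


def generations_to_budget(target_seconds: float, takes_per_shot: int = 3) -> int:
    """Total generations to plan for, assuming several takes per shot."""
    return clips_needed(target_seconds) * takes_per_shot


shots = clips_needed(60)            # a one-minute sequence
total = generations_to_budget(60)   # with three takes per shot
print(f"{shots} shots, about {total} generations")
```

In practice you will trim most clips in the edit, so the real shot count for a given runtime is usually somewhat higher than this lower bound.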

Most 2025 “AI productivity” tools focus on text, images, or voice; Veo 3 merges all three in a single prompt. Where image generators hand you stills and voice tools give you narration, Veo spits out the whole movie clip—camera moves, sound design, even character speech. That one-stop output collapses a multi-app workflow into a single generation window, freeing you from export-import gymnastics. Add Google’s Flow director panel and you gain shot-by-shot control unavailable in lighter apps. In effect, Veo 3 rewrites the definition of “productivity” for visual creators, turning ideation and execution into back-to-back steps.

Yes – as of late 2023, ChatGPT (especially ChatGPT Plus) can create AI images thanks to the integration of OpenAI’s DALL·E 3 model. You don’t see a drawing interface or anything; instead, you simply ask ChatGPT in plain language to generate an image. For example, you might type, “Create an image of a cute robot reading a book under a tree,” and ChatGPT will output an image (or a set of image variations) matching that description. It all happens in the chat window. For free users of ChatGPT, this feature may not be available directly on the OpenAI site, but you can still access DALL·E 3 via Bing Chat (just ask Bing’s chatbot for an image, and it will produce one). The ChatGPT interface has an advantage: you can have a back-and-forth with the AI about the image. If the first result isn’t perfect, you can say “make it more cartoonish” or “add a sunset in the background,” and ChatGPT will refine the image. In our experience, ChatGPT is quite good at generating images that involve multiple elements or need some storytelling context, because it “understands” the request deeply through GPT-4. Just note that the resolution is moderate (not super high-res) and there are content limitations (it won’t create disallowed imagery). But for many everyday needs – concept sketches, simple illustrations, social media visuals – ChatGPT can indeed serve as an image creator in addition to being a text assistant. It’s like having a conversation with an artist who can draw what you describe.

If we’re talking specifically about creating short vertical videos for TikTok, Instagram Reels, or YouTube Shorts, tools that automatically find and format highlights are the most helpful. Opus Clip is one of the best for this – it detects compelling moments in a longer video and outputs ready-to-post vertical clips with captions. Klap is another strong choice, very similar in function. Both of these save tons of time compared to manual editing. If you prefer doing some editing yourself but want AI assistance, CapCut (mobile app) offers auto-captions and some smart editing templates, and Veed (web app) provides easy editing with features like auto subtitles and filters. So the “best” might be Opus Clip for full automation, but if you need a free solution, CapCut is a great mobile-friendly alternative. It also depends on your content – try a couple and see which output you like more.

Yes – many creators successfully use AI avatar videos on YouTube and other social platforms. These tools let you produce talking-head marketing content quickly. Viewers tend to respond well as long as your avatar is engaging and your message is on point. The key is to keep videos concise and ensure the script feels authentic. Done right, AI avatar videos can boost your content output without losing audience interest.
